by Prof. Flor Lacanilao

Perhaps you are wondering why I should be speaking of R&D at a meeting of systematic biologists. One reason is that knowing R&D is as important to biological research as systematic biology is. If I can persuade even 10 percent of you to publish your papers in peer-reviewed international journals, I will have done my job. And if that 10 percent rises to 20 and then 30 percent at your succeeding meetings, then in a few years your organization will be one of the leading science organizations in the country. You can even aim to be the first with an all-published membership. That would be a clear indication of a changed research environment in the Philippines.

My second reason is to remind you that your presentation at this meeting is not the last phase or conclusion of a research project. A scientific meeting is a chance to present your manuscript for comments (a preliminary peer review) before it undergoes formal peer review when you submit it for publication in a primary research journal.

Thirdly, I hope my manner of presentation (e.g., how the slides and accompanying handouts were prepared) will suggest to you how to present a paper orally. This is important because the way you present a paper, as at international meetings, will determine whether or not you get useful comments from scientists in the audience to improve your manuscript.

My talk starts with how research should be done; then I show some symptoms of wrong research practice in the Philippines and their two major causes (the lack of funds is not one of them, but it is often made the excuse for poor performance). Finally, I give examples of how neighboring countries that have done better in research have moved ahead of the Philippines in national progress, or are on their way to leaving us behind. I use established objective indicators here to show the countries’ performance in research and development. Our failure to use these indicators has been a major cause of the poor state of Philippine science.

1. Doing research properly

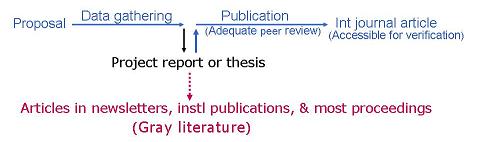

The established process of research has undergone more than three centuries of development, since the publication of the first two scientific journals in London and Paris in 1665. It requires publication in a research journal that is adequately peer-reviewed and accessible for international verification of results (Fig. 1). Examples are the journals covered in the Science Citation Index of Thomson ISI. The review and verification processes support and guard the integrity of the published paper. The output is called a scientific paper or valid publication, as opposed to gray literature (information produced without adequate peer review). As a noted physicist says, “Just printing results doesn’t validate them.”

Many studies end as a project report or graduate thesis. In the Philippines, this is often accepted as the completion of research or graduate training. If the work is published at all, in most cases it appears as gray literature. Examples are papers in newsletters, institutional reports, most conference proceedings, and nearly all local journals. They have doubtful scientific value. To this day, only a small fraction of the research papers we produce is properly published as scientific papers.

2. Symptoms and major causes of wrong research practice

Most Philippine research publications are clear indications of wrong research practice. They do not count in international ratings of research performance when ranking nations, universities, or individuals; nor do they help in national progress. They are seen in the reference sections of books, training manuals, bibliographies, extension publications, and review articles. In “Bibliography of Philippine marine invertebrates” (1994), for example, only 7 percent of the 1032 references listed are ISI-indexed, that is, valid publications. In “Bibliography of Philippine seaweeds” (1990), only 8 percent of the 780 listed references are such publications. And in “Biology of milkfish” (1991), only 19 percent of the 298 works cited are valid publications. Since there are now over 200 such articles on milkfish, the book is overdue for revision.
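To make these proportions concrete, the percentages above can be converted into approximate counts of valid publications. The following is only a rough sketch: the totals and percentages are the ones quoted above, while the function name `approx_valid_count` is a hypothetical helper, and the resulting counts are estimates because the quoted percentages are themselves rounded.

```python
# Totals and percentages as quoted in the talk: (references listed, percent valid).
bibliographies = {
    "Bibliography of Philippine marine invertebrates (1994)": (1032, 7),
    "Bibliography of Philippine seaweeds (1990)": (780, 8),
    "Biology of milkfish (1991)": (298, 19),
}


def approx_valid_count(total_refs, percent_valid):
    """Estimate the number of valid publications, rounded to a whole reference.

    Hypothetical helper: the result is approximate because the
    percentages quoted in the talk are already rounded.
    """
    return round(total_refs * percent_valid / 100)


valid_counts = {
    title: approx_valid_count(total, percent)
    for title, (total, percent) in bibliographies.items()
}

for title, count in valid_counts.items():
    total = bibliographies[title][0]
    print(f"{title}: about {count} of {total} references are valid publications")
```

Read this way, each of the three works rests on only a few dozen valid publications.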

You may have seen training manuals or extension publications by local authors without a single valid publication in the bibliography. I have yet to see a book by Filipino authors with a reference list dominated by publications covered in Thomson ISI’s major indexes (e.g., Science Citation Index and Social Sciences Citation Index), which are used in ranking nations and universities. We have been told, as far back as graduate training, that the integrity or reliability of a publication, primary or not, depends on the quality of its bibliography.

The widespread practice of wrong research in the country continues because authors get promotions, recognition, or even awards for gray literature. You see them writing science columns in newspapers. Worse, you see them invited to speak at recognition and commencement programs. Surely we can easily make valid publications a minimum requirement for such functions. A simple change like this will guarantee a more science-literate public and properly trained graduate students, who can be the future leaders of our academic and science institutions.

For a developing country, which is short of adequately published researchers, an objective evaluation of performance is more reliable. The indicators often used are the number of valid publications (quantity) and the number of times these publications are cited (quality) (Table 1). Both can be obtained from the widely used Science Citation Index or any of Thomson ISI’s major indexes. See, for example, “The scientific impact of nations” (Nature, 430:311-316, 2004) at http://www.nature.com/nature/journal/v430/n6997/full/430311a.html

Table 1. Relations between the quantity and quality of publications in performance evaluation

From The Scientist 14:31, 10 July 2000.
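One simple way to relate the two indicators (quantity and quality) is the average number of citations per paper. The sketch below is an illustration only: using this ratio is an assumption on my part and is not taken from Table 1, the function name `citations_per_paper` is hypothetical, and the figures in the example are placeholders, not real data for any person or country.

```python
def citations_per_paper(num_papers, num_citations):
    """Average citations per valid publication.

    Hypothetical helper: num_papers is the quantity indicator and
    num_citations is the total citation count (quality indicator).
    """
    if num_papers <= 0:
        raise ValueError("num_papers must be positive")
    return num_citations / num_papers


# Placeholder figures for illustration only (not real data).
example_papers = 40
example_citations = 200
example_ratio = citations_per_paper(example_papers, example_citations)
print(f"{example_ratio:.1f} citations per paper")
```

Both inputs are simple counts that can be looked up in a citation index, so no personal judgment is needed to apply the measure.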

The rare use of such objective indicators, and the prevalent use of personal judgment by nonscientists (who lack valid publications), are the two major causes of the poor state of Philippine science and higher education. Personal judgment is the common way we evaluate research proposals and output when giving grants, appointments, promotions, and awards. While some reforms have been ongoing at the University of the Philippines, especially in the use of journals covered in Thomson ISI’s major indexes, attempts by nonscientists to practice peer review remain widespread elsewhere.

3. How research leads to development

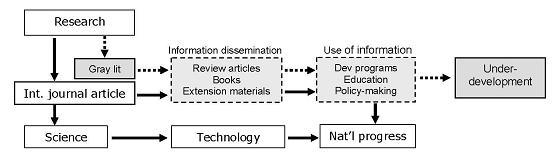

R&D and S&T are commonly used acronyms. But many hardly know how they relate to each other: research leads to science, science to technology, and technology to development. These relations can explain how the two kinds of research output (gray literature and the scientific paper or international journal article) are responsible for underdevelopment or development (Fig. 2). Depending on its quality, the information disseminated (through extension materials, books, or review articles) will harm or help development programs, education, and policy-making. And these in turn determine a country’s state of development. For more discussion, see “Essentials of development” at http://www.ovcrd.upd.edu.ph/index.php?option=com_content&task=view&id=462&Itemid=81

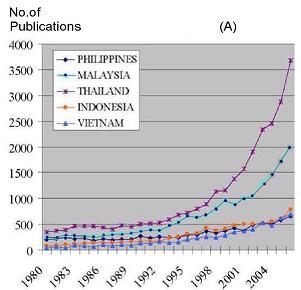

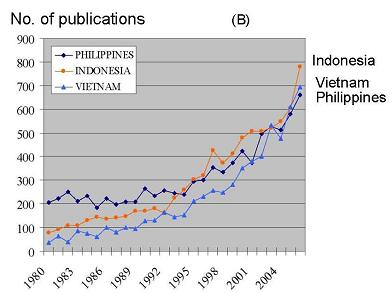

Using Science Citation Index Expanded, Katherine Bagarinao reviewed the publication performance (number of indexed articles) of five ASEAN countries from 1980 to 2006. She has shown with graphs that Thailand and Malaysia were ahead of the Philippines from 1980 (Fig. 3A), but the Philippines was ahead of Indonesia and Vietnam. The Philippines, however, was overtaken in number of publications by Indonesia in the mid-1990s and by Vietnam in the mid-2000s (Fig. 3B). The Philippines is not only behind in publications; it has also shown the slowest growth rate among the five countries throughout the period covered.

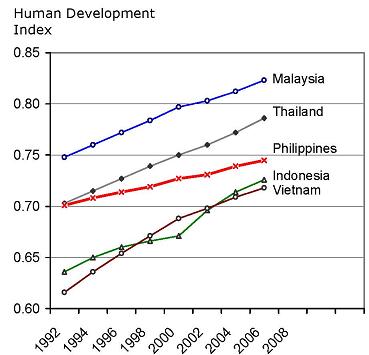

In assessing national progress, the UNDP employs the Human Development Index (HDI), which combines economic and social indicators. Using the data from the UNDP’s Human Development Index Trends for 1980-2008 (http://hdr.undp.org/en/statistics/data/motionchart/), I plotted the HDI of the five countries above to visualize their growth trends (Fig. 4). The performance of the five countries in research (Fig. 3) corresponds with their performance in development (as measured by HDI). The Philippines, with the lowest scientific productivity, also has the lowest growth rate in development.

From 1980, only Malaysia was ahead of the Philippines (its continued lead may be explained by its being an oil country). Thailand then followed, surpassing the Philippines in 1992, and has been moving faster since. Although Indonesia and Vietnam are still behind the Philippines, their HDI has been growing faster and, as their growth curves and that of the Philippines show, they are headed to overtake the Philippines in a few years. Note that Indonesia and Vietnam left us behind in science in the mid-1990s and mid-2000s, respectively (Fig. 3B).

Further, the UNDP’s Human Development Reports rank each nation’s development against that of other countries. Among 177 countries and territories, the Philippines’ ranking has been going down in the last 10 years. In 1997 and 1998, the Philippines ranked 77th, but this dropped to 83rd-85th in 2000-2004, and to 102nd in 2006.

As Peter Meyer, director of graduate studies in physics at Princeton, says, “You need to know how to do research properly before you can begin to think about commercializing discoveries.”

I am glad (though sad for our country) to see that poorer Africa has, in recent years, been establishing research universities and developing strong science academies to solve poverty. Note that Africa is getting to the bottom of poverty (a symptom) by attending to the basic cause of underdevelopment: poor S&T.

For more, see:

http://www.scidev.net/en/opinions/africa-needs-research-universities-to-fight-povert.html

http://www.nature.com/nature/journal/v450/n7171/full/450762a.html

4. Conclusion

Development depends on the quality of the research output, which in turn relies on correct research practice. There are two ways to improve research: (a) leave the job of performance evaluation to scientists, or (b) use the established and objective indicators (e.g., journals and publication citations in Science Citation Index or Social Sciences Citation Index).

_______________________

Keynote address at the 27th Meeting of the Association of Systematic Biologists of the Philippines, National Museum, Manila, 29-30 May 2009

Flor Lacanilao, retired professor of marine science, University of the Philippines Diliman

florlaca @ gmail.com